If you are looking for DevOps interview questions and answers, you are on the right page. Below are real-world DevOps interview questions from 2020. Go through all of them to prepare for your interview.

1. What is DevOps?

The term DevOps combines "development" and "operations". DevOps is a set of practices and tools that support automation across the complete infrastructure. It is also a mindset in IT that encourages communication and automation between developers and IT operations.

2. Why do we need DevOps?

We need DevOps to develop applications faster and to meet users' requirements. DevOps builds on agile processes and automates both development and operations work. It helps deliver applications to end users continuously, so that benefits reach them sooner.

3. What are the best practices for adopting DevOps?

1. Take a Smart Approach to Automation.

2. Emphasize Quality Assurance Early.

3. Adopt Iteratively.

4. Understand and Address Your Unique Needs.

5. Make the Most of Metrics.

6. Embrace a DevOps Mindset.

4. What are the key aspects of DevOps?

1. Automated Delivery Pipeline.

2. Configuration Management.

3. Regular Integration.

4. Automated Monitoring & Health Checks.

5. The Firefighter Role.

6. Infrastructure as Code.

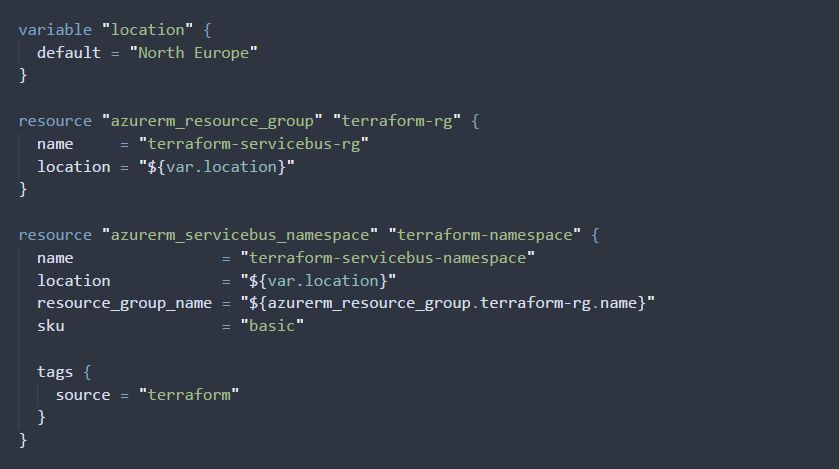

5. How would you explain the concept of “Infrastructure as Code”?

Infrastructure as Code is the management of infrastructure, such as connection topology, load balancers, virtual machines and networks, through a descriptive model. The model is versioned in the same way the DevOps team versions source code. Infrastructure as Code was developed to solve the problem of environments drifting apart from one another.
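
To make the idea of a descriptive model concrete, here is a minimal sketch in Python. It is not how any particular tool works: the `Resource` class, the `plan` function and the resource names are all hypothetical, chosen only to show infrastructure described as data and compared against what is actually running.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Resource:
    """A hypothetical infrastructure resource described as data."""
    name: str
    kind: str  # e.g. "vm", "load_balancer", "network"
    size: str


def plan(desired, actual):
    """Compare the descriptive model with what exists and list the changes needed."""
    desired_by_name = {r.name: r for r in desired}
    actual_by_name = {r.name: r for r in actual}
    actions = []
    for name, resource in sorted(desired_by_name.items()):
        if name not in actual_by_name:
            actions.append(("create", name))
        elif actual_by_name[name] != resource:
            actions.append(("update", name))
    for name in sorted(actual_by_name):
        if name not in desired_by_name:
            actions.append(("delete", name))
    return actions


# The model would live in version control next to the application code.
desired = [
    Resource("web-1", "vm", "small"),
    Resource("lb-1", "load_balancer", "standard"),
]
# What is actually running has drifted away from the model.
actual = [
    Resource("web-1", "vm", "medium"),
    Resource("old-db", "vm", "large"),
]

print(plan(desired, actual))
```

Because the desired state is plain data, it can be reviewed, diffed and rolled back like any other source file, and applying the same model twice yields an empty plan.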

6. What is the use of Chef in DevOps?

Chef is a widely used configuration management tool for setting up machines on physical servers, on virtual machines and in the cloud. Many companies, including Facebook, use Chef to manage their infrastructure. Chef is written in Ruby and Erlang.

7. What is the purpose of Git?

The main purpose of Git is to manage a project, or a set of files, as they change over time. Git stores this information in a data structure called a repository. A repository contains, among other things, a set of commit objects. Git is a third-generation version control tool.
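
The sketch below illustrates what a commit object is, assuming a repository that uses Git's default SHA-1 object format. Git names every object by hashing a short header plus the object body, and a commit body points to a tree and to its parent commits. The helper names, the author identity and the timestamp are made up for illustration.

```python
import hashlib


def git_object_id(kind: str, body: bytes) -> str:
    """Return the SHA-1 id Git gives an object: hash of '<kind> <size>\\0' + body."""
    header = f"{kind} {len(body)}".encode("ascii") + b"\0"
    return hashlib.sha1(header + body).hexdigest()


def commit_body(tree_id: str, parent_ids: list, message: str) -> bytes:
    """Build the text of a commit object that points to a tree and its parents."""
    # Hypothetical author and fixed timestamp, used only to keep the example stable.
    who = "Example Dev <dev@example.com> 1600000000 +0000"
    lines = [f"tree {tree_id}"]
    lines += [f"parent {parent}" for parent in parent_ids]
    lines += [f"author {who}", f"committer {who}", "", message]
    return ("\n".join(lines) + "\n").encode("utf-8")


# An empty tree stands in for the project snapshot.
empty_tree = git_object_id("tree", b"")
first = git_object_id("commit", commit_body(empty_tree, [], "Initial commit"))
second = git_object_id("commit", commit_body(empty_tree, [first], "Second commit"))

print("tree  ", empty_tree)
print("first ", first)
print("second", second)
```

Each commit id depends on its parent's id, so the history forms a chain in which changing any earlier commit changes every id after it.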

8. How is DevOps helpful to developers?

DevOps helps developers make decisions quickly and deliver the applications that users need. Because DevOps brings together a range of tools, developers can use them to get accurate data and complete tasks within the specified time.

9. Name the popular scripting language of DevOps?

10. Name some Agile Methods in DevOps?

1. Test-first programming.

2. Regular refactoring.

3. Continuous Integration.

4. Simple design.

5. Pair programming.

6. Sharing the codebase between programmers.

7. A single coding standard for all programmers.

8. A common “war-room” style work area.

11. Name some DevOps Tools?

1. BitBucket.

2. Sentry.

3. GitHub.

4. Ansible.

5. Vagrant.

6. Nagios.

7. Phantom.

8. Docker.

9. Jenkins.

10. Slack.

12. What is the scope of SSH?

SSH access is restricted: running commands such as “apt-get update” or “apt-get install” by hand is not allowed. The environment is provisioned from scratch with Ansible playbooks, so complete SSH access is not provided.

13. What are the benefits of DevOps from a technical and a business perspective?

1. Technical benefits:

a) Continuous software delivery.

b) Less complex problems to fix.

c) Faster resolution of problems.

d) Reduced manpower.

2. Business benefits:

a) Stable operating environments.

b) Better communication and collaboration.

c) Faster delivery of business features.

14. What are the anti-patterns of DevOps?

1. DevOps Isn’t Feasible with Legacy Systems.

2. DevOps Gets Rid of Operations.

3. DevOps and Security Are Foes.

4. DevOps Is Only About Automation.

5. You Need a Dedicated DevOps Team.

6. DevOps Is All About the Tools.

7. Agile and DevOps Are the Same.

8. DevOps Is Merely Merging Development and Operations Teams.

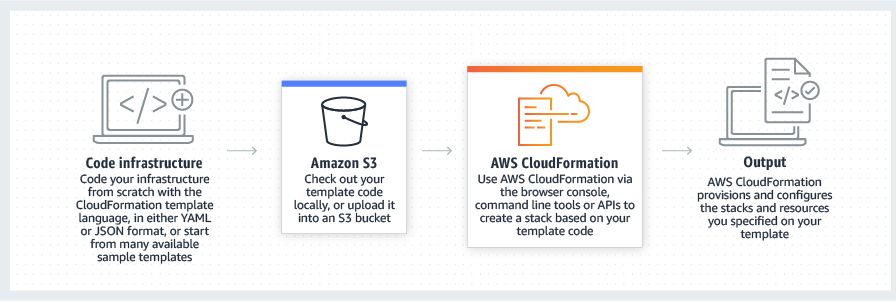

15. What is the Role of AWS in DevOps?

When the interviewer asks this question, start by explaining that AWS is Amazon's cloud service, which provides scalable computing and storage. With AWS, a company can build and deliver complex products and move its applications to the cloud.

16. What challenges exist when creating DevOps pipelines?

Rolling out new features and running database migrations are two common challenges in DevOps pipelines. Feature flags are a simple way to handle incremental product releases inside CI environments.
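
As an illustration of how a feature flag supports an incremental release, here is a minimal sketch in Python. The `FeatureFlags` class, the flag names and the percentages are all hypothetical; real projects usually load flag settings from configuration or a flag service rather than hard-coding them.

```python
import hashlib


class FeatureFlags:
    """Hypothetical in-memory flag store used only for this example."""

    def __init__(self, rollout_percent):
        # Maps flag name -> percentage of users (0 to 100) who see the feature.
        self.rollout_percent = dict(rollout_percent)

    def is_enabled(self, flag, user_id):
        percent = self.rollout_percent.get(flag, 0)  # unknown flags stay off
        # Hash flag + user so each user lands in a stable bucket from 0 to 99.
        digest = hashlib.sha256(f"{flag}:{user_id}".encode("utf-8")).digest()
        bucket = int.from_bytes(digest[:4], "big") % 100
        return bucket < percent


flags = FeatureFlags({"new_checkout": 25, "dark_mode": 100, "beta_search": 0})


def checkout(user_id):
    # Both code paths ship in the same build; the flag decides which one runs.
    if flags.is_enabled("new_checkout", user_id):
        return "new checkout flow"
    return "old checkout flow"


for user in ("alice", "bob", "carol"):
    print(user, checkout(user))
```

Raising the percentage widens the release without a new deployment, and setting it back to 0 switches the feature off again if something goes wrong.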

17. What is CAMS in DevOps?

Culture.

Automation.

Measurement.

Sharing.

18. What is kubectl?

kubectl is a command-line interface for running commands against Kubernetes clusters. The “ctl” stands for “control”. kubectl is used to deploy applications and to inspect and manage cluster resources.
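
The following Python sketch shows one way a deployment script might drive kubectl. The helper functions, the file name `deployment.yaml`, the deployment name `web` and the namespace `demo` are assumptions made for illustration. The subcommands themselves (`apply -f`, `get pods`, `rollout status`) are standard kubectl commands.

```python
import shutil
import subprocess


def kubectl_args(*args, namespace=None):
    """Build the argument list for a kubectl call (hypothetical helper)."""
    cmd = ["kubectl", *args]
    if namespace:
        cmd += ["--namespace", namespace]
    return cmd


def run_kubectl(*args, namespace=None):
    """Run kubectl and return its standard output, or None if it is not installed."""
    if shutil.which("kubectl") is None:
        return None
    result = subprocess.run(
        kubectl_args(*args, namespace=namespace),
        capture_output=True,
        text=True,
        check=False,
    )
    return result.stdout


# Typical steps: deploy an app, wait for the rollout, then check the pods.
steps = [
    kubectl_args("apply", "-f", "deployment.yaml", namespace="demo"),
    kubectl_args("rollout", "status", "deployment/web", namespace="demo"),
    kubectl_args("get", "pods", namespace="demo"),
]
for step in steps:
    print(" ".join(step))
```

The loop only prints the commands. Calling `run_kubectl("get", "pods", namespace="demo")` would execute one against whichever cluster the local kubeconfig points to.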

19. How do you conduct an incident post-mortem for ongoing DevOps improvement?

An incident occurs when software performance deviates from what is expected. A post-mortem centers on root cause analysis, but there is no single approach to it. Post-mortems range from simple to highly formal, depending on the size of the business.
